The Ultimate Guide to PostgreSQL Data Change Tracking

PostgreSQL, one of the most popular databases, was named DBMS of the Year 2023 by DB-Engines Ranking and is used more than any other database among startups according to HN Hiring Trends.

The SQL standard has included features related to temporal databases since 2011, which allow storing data changes over time rather than just the current data state. However, relational databases don’t completely follow the standard. PostgreSQL doesn’t support these features, even though a patch has been submitted and discussed.

Let’s explore five alternative methods of data change tracking in PostgreSQL available to us in 2024.

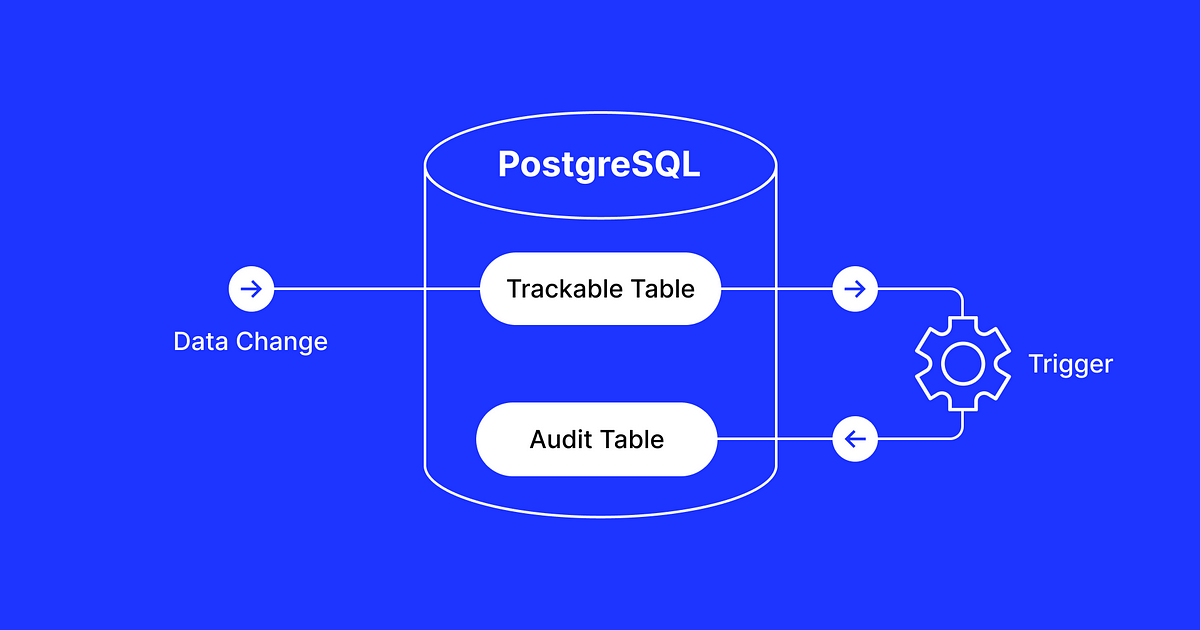

PostgreSQL allows adding triggers with custom procedural SQL code executed on row changes made by INSERT, UPDATE, and DELETE queries. The official PostgreSQL wiki describes a generic audit trigger function. Let’s have a quick look at a simplified example.

First, create a table called logged_actions in a separate schema called audit:

CREATE SCHEMA audit;

CREATE TABLE audit.logged_actions (
  schema_name TEXT NOT NULL,
  table_name TEXT NOT NULL,
  user_name TEXT,
  action_tstamp TIMESTAMP WITH TIME ZONE NOT NULL DEFAULT current_timestamp,
  action TEXT NOT NULL CHECK (action IN ('I','D','U')),
  original_data TEXT,
  new_data TEXT,
  query TEXT
);

Next, create a function to insert audit records and set up a trigger on a table you want to track, such as my_table:

CREATE OR REPLACE FUNCTION audit.if_modified_func() RETURNS TRIGGER AS $body$
BEGIN
  IF (TG_OP = 'UPDATE') THEN
    INSERT INTO audit.logged_actions (schema_name,table_name,user_name,action,original_data,new_data,query)
    VALUES (TG_TABLE_SCHEMA::TEXT,TG_TABLE_NAME::TEXT,session_user::TEXT,substring(TG_OP,1,1),ROW(OLD.*),ROW(NEW.*),current_query());
    RETURN NEW;
  ELSIF (TG_OP = 'DELETE') THEN
    INSERT INTO audit.logged_actions (schema_name,table_name,user_name,action,original_data,query)
    VALUES (TG_TABLE_SCHEMA::TEXT,TG_TABLE_NAME::TEXT,session_user::TEXT,substring(TG_OP,1,1),ROW(OLD.*),current_query());
    RETURN OLD;
  ELSIF (TG_OP = 'INSERT') THEN
    INSERT INTO audit.logged_actions (schema_name,table_name,user_name,action,new_data,query)
    VALUES (TG_TABLE_SCHEMA::TEXT,TG_TABLE_NAME::TEXT,session_user::TEXT,substring(TG_OP,1,1),ROW(NEW.*),current_query());
    RETURN NEW;
  END IF;
END;
$body$
LANGUAGE plpgsql;

-- Schema-qualify the function so the trigger resolves regardless of search_path.
CREATE TRIGGER my_table_if_modified_trigger
AFTER INSERT OR UPDATE OR DELETE ON my_table
FOR EACH ROW EXECUTE PROCEDURE audit.if_modified_func();

Once it’s done, row changes made in my_table will create records in audit.logged_actions:

INSERT INTO my_table(x,y) VALUES (1, 2);

SELECT * FROM audit.logged_actions;

If you want to further improve this solution by using JSONB columns instead of TEXT, ignoring changes in certain columns, pausing auditing of a table, etc., check out the SQL example in this audit-trigger repo and its forks.
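One of the enhancements mentioned above, ignoring changes in certain columns, comes down to comparing the old and new row values before an audit record is written. The sketch below illustrates that comparison in TypeScript rather than inside a trigger. The changedColumns helper and the updated_at column are hypothetical and are not taken from the audit-trigger repo.

```typescript
// Hypothetical row shape: column name -> JSON-serializable value.
type Row = Record<string, unknown>;

// Returns the sorted names of columns whose values differ between the old and
// new row, skipping any column listed in `ignored`.
function changedColumns(oldRow: Row, newRow: Row, ignored: string[] = []): string[] {
  // Union of the column names of both rows, without duplicates.
  const columns = Object.keys({ ...oldRow, ...newRow });
  return columns
    .filter((column) => ignored.indexOf(column) === -1)
    // Compare JSON representations so nested arrays and objects work too.
    .filter((column) => JSON.stringify(oldRow[column]) !== JSON.stringify(newRow[column]))
    .sort();
}

const rowBefore: Row = { id: 1, x: 1, y: 2, updated_at: "2024-01-01T00:00:00Z" };
const rowAfter: Row = { id: 1, x: 1, y: 3, updated_at: "2024-01-02T00:00:00Z" };

// With updated_at ignored, only y counts as a change.
const changed = changedColumns(rowBefore, rowAfter, ["updated_at"]);
console.log(changed);
```

A trigger could apply the same idea and skip the audit insert when the resulting list is empty, which keeps timestamp-only updates out of the audit table.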

Downsides

- Performance. Triggers add performance overhead by inserting additional records synchronously on every INSERT, UPDATE, and DELETE operation.

- Security. Anyone with superuser access can modify the triggers and make unnoticed data changes. It is also recommended to make sure that records in the audit table cannot be modified or removed.

- Maintenance. Managing complex triggers across many constantly changing tables can become cumbersome. Making a small mistake in an SQL script can break queries or data change tracking functionality.

This approach is similar to the previous one, but instead of writing data changes to the audit table directly, we pass them from a trigger through a pub/sub mechanism to another system dedicated to reading and storing these data changes:

CREATE OR REPLACE FUNCTION if_modified_func() RETURNS TRIGGER AS $body$
BEGIN
  IF (TG_OP = 'UPDATE') THEN
    PERFORM pg_notify('data_changes', json_build_object(
      'schema_name', TG_TABLE_SCHEMA::TEXT,
      'table_name', TG_TABLE_NAME::TEXT,
      'user_name', session_user::TEXT,
      'action', substring(TG_OP,1,1),
      'original_data', to_jsonb(OLD),
      'new_data', to_jsonb(NEW)
    )::TEXT);
    RETURN NEW;
  ELSIF (TG_OP = 'DELETE') THEN
    PERFORM pg_notify('data_changes', json_build_object(
      'schema_name', TG_TABLE_SCHEMA::TEXT,
      'table_name', TG_TABLE_NAME::TEXT,
      'user_name', session_user::TEXT,
      'action', substring(TG_OP,1,1),
      'original_data', to_jsonb(OLD)
    )::TEXT);
    RETURN OLD;
  ELSIF (TG_OP = 'INSERT') THEN
    PERFORM pg_notify('data_changes', json_build_object(
      'schema_name', TG_TABLE_SCHEMA::TEXT,
      'table_name', TG_TABLE_NAME::TEXT,
      'user_name', session_user::TEXT,
      'action', substring(TG_OP,1,1),
      'new_data', to_jsonb(NEW)
    )::TEXT);
    RETURN NEW;
  END IF;
END;
$body$
LANGUAGE plpgsql;

CREATE TRIGGER my_table_if_modified_trigger
AFTER INSERT OR UPDATE OR DELETE ON my_table
FOR EACH ROW EXECUTE PROCEDURE if_modified_func();

Now it’s possible to run a separate worker process that listens to messages containing data changes and stores them separately:

LISTEN data_changes;

Downsides

- “At most once” delivery. Listen/notify notifications are not persisted, which means that if a listener disconnects, it may miss updates that happened before it reconnected.

- Payload size limit. Listen/notify messages have a maximum payload size of 8000 bytes by default. For larger payloads, it’s recommended to store them in the DB audit table and send only references to the records.

- Debugging. Troubleshooting issues related to triggers and listen/notify in a production environment can be challenging due to their asynchronous and distributed nature.
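The payload size limit above suggests a simple fallback: send the full change when it fits and only a reference otherwise. A minimal sketch of that decision follows. The buildNotification helper, the auditRecordId field, and both message shapes are hypothetical, and the sketch assumes the full change has already been stored in an audit table under that id.

```typescript
// Hypothetical shape of a data change passed through pg_notify.
interface DataChange {
  schema_name: string;
  table_name: string;
  action: "I" | "U" | "D";
  original_data?: Record<string, unknown>;
  new_data?: Record<string, unknown>;
}

// In the default configuration a NOTIFY payload must be shorter than 8000 bytes.
const MAX_PAYLOAD_BYTES = 7999;

// Size of a string in bytes when encoded as UTF-8.
function byteLength(text: string): number {
  return new TextEncoder().encode(text).length;
}

// Returns the payload to send: the full change if it fits, otherwise a small
// message that only references the stored audit record.
function buildNotification(change: DataChange, auditRecordId: number): string {
  const full = JSON.stringify({ kind: "full", change });
  if (byteLength(full) <= MAX_PAYLOAD_BYTES) {
    return full;
  }
  return JSON.stringify({ kind: "reference", auditRecordId });
}

const smallChange: DataChange = {
  schema_name: "public",
  table_name: "my_table",
  action: "I",
  new_data: { x: 1, y: 2 },
};
// Same change with a 10000-character value, which cannot fit in one payload.
const largeChange: DataChange = { ...smallChange, new_data: { x: 1, notes: "a".repeat(10000) } };

console.log(JSON.parse(buildNotification(smallChange, 1)).kind); // full
console.log(JSON.parse(buildNotification(largeChange, 2)).kind); // reference
```

Measuring bytes rather than characters matters here because multi-byte UTF-8 characters count more than once toward the limit.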

If you have control over the codebase that connects to a PostgreSQL database and makes data changes, then one of the following options is also available to you:

- Manually record all data changes when issuing INSERT, UPDATE, and DELETE queries

- Use existing open-source libraries that integrate with popular ORMs

For example, there is paper_trail for Ruby on Rails with ActiveRecord and django-simple-history for Django. At a high level, they use callbacks or middlewares to insert additional records into an audit table. Here’s a simplified example written in Ruby:

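class User < ApplicationRecord
  after_commit :track_data_changes

  private

  def track_data_changes
    # saved_changes holds the attributes changed by the last save;
    # changes is already empty by the time after_commit runs.
    # The attribute is named object_changes because an attribute called
    # changes would clash with Active Record's own changes method.
    AuditRecord.create!(auditable: self, object_changes: saved_changes)
  end
end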

On the application level, Event Sourcing can also be implemented with an append-only log as the source of truth. But it’s a separate, big, and exciting topic that deserves a separate blog post.

Downsides

- Reliability. Application-level data change tracking is not as accurate as database-level change tracking. For example, data changes made outside an app will not be tracked, developers may accidentally skip callbacks, or there could be data inconsistencies if a query changing the data has succeeded but a query inserting an audit record failed.

- Performance. Manually capturing changes and inserting them in the database via callbacks leads to both runtime application and database overhead.

- Scalability. These audit tables are usually stored in the same database and can quickly become unmanageable, which can require separating the storage, implementing declarative partitioning, and continuous archiving.
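The inconsistency described under Reliability, where the data change succeeds but the audit insert fails, is commonly avoided by writing both in the same transaction. The sketch below imitates that with an in-memory store so it can run without a database. InMemoryStore, its transaction method, and the table names are hypothetical stand-ins for a real database client.

```typescript
// Hypothetical in-memory stand-in for a database with two tables.
interface StoreState {
  users: { id: number; email: string }[];
  auditRecords: { table: string; action: string; data: unknown }[];
}

class InMemoryStore {
  state: StoreState = { users: [], auditRecords: [] };

  // Runs `work` against a copy of the state and keeps the copy only if `work`
  // finishes without throwing, which mimics COMMIT and ROLLBACK.
  transaction(work: (draft: StoreState) => void): void {
    const draft: StoreState = JSON.parse(JSON.stringify(this.state));
    work(draft); // an exception here leaves this.state untouched
    this.state = draft;
  }
}

// Inserts a user and its audit record in one transaction.
// `failAudit` simulates an error while writing the audit record.
function createUser(store: InMemoryStore, email: string, failAudit = false): void {
  store.transaction((draft) => {
    const user = { id: draft.users.length + 1, email };
    draft.users.push(user);
    if (failAudit) {
      throw new Error("audit insert failed");
    }
    draft.auditRecords.push({ table: "users", action: "I", data: user });
  });
}

const auditedStore = new InMemoryStore();
createUser(auditedStore, "a@example.com");
try {
  createUser(auditedStore, "b@example.com", true);
} catch (error) {
  // The failed transaction discarded both the user and the audit record.
}
console.log(auditedStore.state.users.length, auditedStore.state.auditRecords.length); // 1 1
```

The trade-off is that a failing audit write now also rejects the data change, which may or may not be acceptable for a given application.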

Change Data Capture (CDC) is a pattern of identifying and capturing changes made to data in a database and sending those changes to a downstream system. Most often it is used for ETL to send data to a data warehouse for analytical purposes.

There are multiple approaches to implementing CDC. One of them, which doesn’t intersect with what we have already discussed, is a log-based CDC. With PostgreSQL, it is possible to connect to the Write-Ahead Log (WAL) that is used for data durability, recovery, and replication to other instances.

PostgreSQL supports two types of replications: physical replication and logical replication. The latter allows decoding WAL changes on a row level and filtering them out, for example, by table name. This is exactly what we need to implement data change tracking with CDC.

Here are the basic steps necessary for retrieving data changes by using logical replication:

1. Set wal_level to logical in postgresql.conf and restart the database.

2. Create a publication like a “pub/sub channel” for receiving data changes:

CREATE PUBLICATION my_publication FOR ALL TABLES;

3. Create a logical replication slot like a “cursor position” in the WAL:

SELECT * FROM pg_create_logical_replication_slot('my_replication_slot', 'wal2json');

4. Fetch the latest unread changes:

SELECT * FROM pg_logical_slot_get_changes('my_replication_slot', NULL, NULL);
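Each row returned in step 4 carries a JSON document in its data column. Assuming the default wal2json output (format version 1), where a transaction is an object with a change array, the sketch below maps such a document to records with a schema, table, action, and row data. The sample JSON is hand-written for illustration rather than captured from a real server, and toAuditRecords is a hypothetical helper.

```typescript
// Subset of the wal2json format-version 1 output used by this sketch.
interface Wal2JsonChange {
  kind: "insert" | "update" | "delete";
  schema: string;
  table: string;
  columnnames?: string[];
  columnvalues?: unknown[];
  // Old values of the replica identity columns (the primary key by default).
  oldkeys?: { keynames: string[]; keyvalues: unknown[] };
}

interface AuditRecord {
  schema_name: string;
  table_name: string;
  action: "I" | "U" | "D";
  original_data?: Record<string, unknown>;
  new_data?: Record<string, unknown>;
}

// Pairs column names with values, so (["x"], [1]) becomes { x: 1 }.
function zipColumns(names: string[], values: unknown[]): Record<string, unknown> {
  const row: Record<string, unknown> = {};
  names.forEach((columnName, index) => {
    row[columnName] = values[index];
  });
  return row;
}

function toAuditRecords(walJson: string): AuditRecord[] {
  const parsed: { change: Wal2JsonChange[] } = JSON.parse(walJson);
  const actions = { insert: "I", update: "U", delete: "D" } as const;
  return parsed.change.map((change) => {
    const record: AuditRecord = {
      schema_name: change.schema,
      table_name: change.table,
      action: actions[change.kind],
    };
    if (change.columnnames && change.columnvalues) {
      record.new_data = zipColumns(change.columnnames, change.columnvalues);
    }
    if (change.oldkeys) {
      record.original_data = zipColumns(change.oldkeys.keynames, change.oldkeys.keyvalues);
    }
    return record;
  });
}

// Hand-written sample: one insert and one delete in the same transaction.
const sampleWalJson = JSON.stringify({
  change: [
    { kind: "insert", schema: "public", table: "my_table", columnnames: ["x", "y"], columnvalues: [1, 2] },
    { kind: "delete", schema: "public", table: "my_table", oldkeys: { keynames: ["x"], keyvalues: [1] } },
  ],
});

const decodedRecords = toAuditRecords(sampleWalJson);
console.log(decodedRecords);
```

Note that original_data here contains only the replica identity columns. Capturing full previous rows would require setting REPLICA IDENTITY FULL on the table.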

To implement log-based CDC with PostgreSQL, I would recommend using the existing open-source solutions. The most popular one is Debezium.

Downsides

- Limited context. PostgreSQL WAL contains only low-level information about row changes and doesn’t include information about an SQL query that triggered the change, information about a user, or any application-specific context.

- Complexity. Implementing CDC adds a lot of system complexity. This involves running a server that connects to PostgreSQL as a replica, consumes data changes, and stores them somewhere.

- Tuning. Running it in a production environment may require a deeper understanding of PostgreSQL internals and properly configuring the system. For example, the position of a replication slot has to be flushed periodically to reclaim WAL disk space.

To overcome the challenge of limited information about data changes stored in the WAL, we can use a clever approach of passing additional context to the WAL directly.

Here is a simple example of passing additional context on row changes:

CREATE OR REPLACE FUNCTION if_modified_func() RETURNS TRIGGER AS $body$
BEGIN
  PERFORM pg_logical_emit_message(true, 'my_message', 'ADDITIONAL_CONTEXT');

  IF (TG_OP = 'DELETE') THEN
    RETURN OLD;
  ELSE
    RETURN NEW;
  END IF;
END;
$body$
LANGUAGE plpgsql;

CREATE TRIGGER my_table_if_modified_trigger
AFTER INSERT OR UPDATE OR DELETE ON my_table
FOR EACH ROW EXECUTE PROCEDURE if_modified_func();

Notice the pg_logical_emit_message function that was added to PostgreSQL as an internal function for plugins. It allows namespacing and emitting messages that will be stored in the WAL. Reading these messages became possible with the standard logical decoding plugin pgoutput since PostgreSQL v14.
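On the consuming side, the emitted messages and the row changes arrive in the same decoded stream, so a consumer can attach the context to the row changes of the same transaction. The sketch below shows that stitching step on a simplified, hypothetical event shape. It is not the wire format of pgoutput or any other plugin, it assumes the consumer knows the transaction id of each event, and it is not a description of how any particular tool implements this.

```typescript
// Simplified, hypothetical events as a CDC consumer might see them after
// decoding: row changes plus messages emitted with pg_logical_emit_message.
type StreamEvent =
  | { type: "change"; xid: number; table: string; action: "I" | "U" | "D" }
  | { type: "message"; xid: number; prefix: string; content: string };

interface ChangeWithContext {
  table: string;
  action: "I" | "U" | "D";
  context: string | null;
}

// Attaches the context emitted in a transaction to every row change of that
// transaction. Assumes at most one distinct context per transaction.
function stitchContext(events: StreamEvent[], prefix: string): ChangeWithContext[] {
  // First pass: remember the context message of each transaction.
  const contextByXid: Record<number, string> = {};
  for (const entry of events) {
    if (entry.type === "message" && entry.prefix === prefix) {
      contextByXid[entry.xid] = entry.content;
    }
  }
  // Second pass: copy row changes and add the context of their transaction.
  const stitched: ChangeWithContext[] = [];
  for (const entry of events) {
    if (entry.type === "change") {
      const context = contextByXid[entry.xid] ?? null;
      stitched.push({ table: entry.table, action: entry.action, context });
    }
  }
  return stitched;
}

const decodedEvents: StreamEvent[] = [
  { type: "change", xid: 100, table: "my_table", action: "I" },
  { type: "message", xid: 100, prefix: "my_message", content: "ADDITIONAL_CONTEXT" },
  { type: "change", xid: 101, table: "my_table", action: "D" },
];

const stitchedChanges = stitchContext(decodedEvents, "my_message");
console.log(stitchedChanges);
```

Matching by transaction id rather than by position avoids depending on whether the message is written before or after the row change, which differs between BEFORE and AFTER triggers.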

There is an open-source project called Bemi, which allows tracking not only low-level data changes but also reading any custom context with CDC and stitching everything together. Full disclosure: I’m one of the core contributors.

For example, it can integrate with popular ORMs and adapters to pass application-specific context with all data changes:

import { setContext } from "@bemi-db/prisma";
import express, { Request } from "express";

const app = express();

app.use(
  // Customizable context
  setContext((req: Request) => ({
    userId: req.user?.id,
    endpoint: req.url,
    params: req.body,
  }))
);

Downsides

- Complexity and tuning related to implementing CDC.

If you need a ready-to-use cloud solution that can be integrated and connected to PostgreSQL in a few minutes, check out bemi.io.

- If you need basic data change tracking, triggers with an audit table are a great initial solution.

- Triggers with listen/notify are a good option for simple testing in a development environment.

- If you value application-specific context (information about a user, API endpoint, etc.) over reliability, you can use application-level tracking.

- Change Data Capture is a good option if you prioritize reliability and scalability as a unified solution that can be reused, for example, across many databases.

- Finally, integrated Change Data Capture is your best bet if you need a robust data change tracking system that can also be integrated into your application. Go with bemi.io if you need a cloud-managed solution.