GPU? TPU? LLM? The AI vocabulary words you need to know

When people unfamiliar with AI envision artificial intelligence, they might imagine Will Smith’s blockbuster I, Robot, the sci-fi thriller Ex Machina, or the Disney movie Smart House: nightmarish scenarios where intelligent robots take over to the doom of their human counterparts.

Today’s generative AI technologies aren’t quite all-powerful yet. Sure, they may be capable of sowing disinformation to disrupt elections or sharing trade secrets. But the tech is still in its early stages, and chatbots are still making big mistakes.

Still, the novelty of the technology is bringing new terms into play. What makes a semiconductor, anyway? How is generative AI different from all the other kinds of artificial intelligence? And do you really know the nuances between a GPU, a CPU, and a TPU?

If you’re looking to keep up with the new jargon the industry is slinging around, Quartz has your guide to its core terms.

Let’s start with the basics for a refresher. Generative artificial intelligence is a category of AI that uses data to create original content. In contrast, traditional AI could only offer predictions based on data inputs, not produce new and unique answers. Generative AI does that through “deep learning,” a form of machine learning that uses artificial neural networks (software programs) loosely modeled on the human brain, so computers can perform human-like analysis.
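To make “artificial neural network” a little more concrete, here is a minimal Python sketch of a tiny network. Each artificial neuron takes a weighted sum of its inputs plus a bias and passes it through an activation function (a sigmoid here), and layers of neurons feed into one another. The weights are made-up numbers for illustration only; in real deep learning they are learned from training data, and real networks have billions of them.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus bias, squashed by a sigmoid."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 / (1 + math.exp(-total))  # output always falls between 0 and 1


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Hypothetical, hand-picked weights; training would normally adjust these.
x = [0.5, -1.0]                                           # input features
hidden = layer(x, [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])  # 2 hidden neurons
output = layer(hidden, [[1.5, -2.0]], [0.2])              # 1 output neuron
print(hidden, output)
```

The “deep” in deep learning refers to stacking many such layers, so each layer can build on patterns found by the one before it.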

Generative AI isn’t pulling answers out of thin air, though. It generates answers based on the data it’s trained on, which can include text, video, audio, and lines of code. Imagine, say, waking up from a coma, blindfolded, and all you can remember is 10 Wikipedia articles. All of your conversations with another person about what you know are based on those 10 Wikipedia articles. It’s kind of like that, except generative AI uses millions of such articles and a lot more.

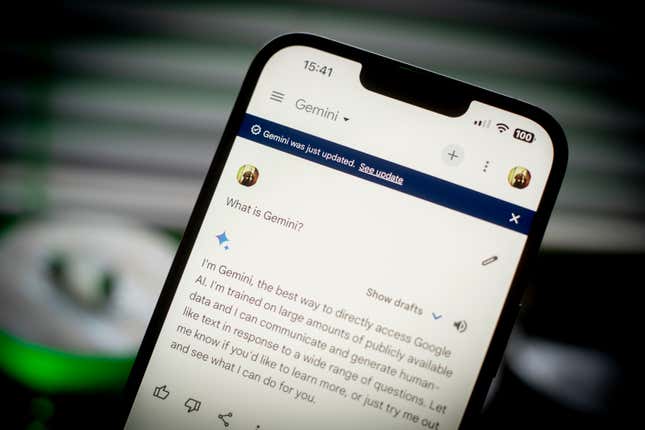

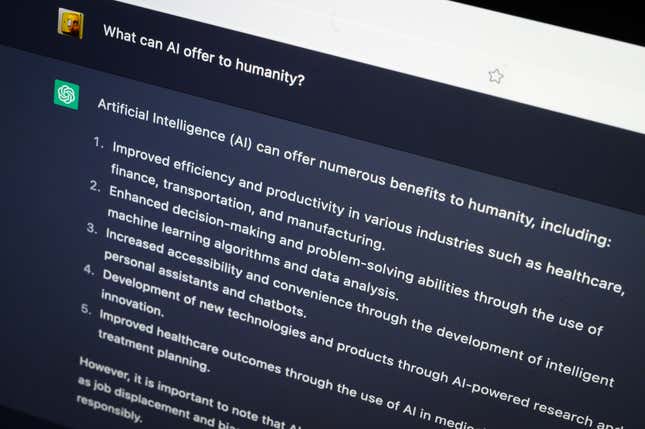

AI chatbots are computer programs that generate human-like conversations with users, giving unique, original answers to their queries. Chatbots were popularized by OpenAI’s ChatGPT, and since then, a bunch more have debuted: Google Gemini, Microsoft Copilot, and Salesforce’s Einstein lead the pack, among others.

Chatbots don’t just generate text responses; they can also build websites, create data visualizations, help with coding, make images, and analyze documents. To be sure, AI chatbots aren’t foolproof yet, and they’ve made plenty of mistakes already. But as AI technology rapidly advances, so will the quality of these chatbots.

Large language models (LLMs) are a type of generative artificial intelligence. They are trained on vast amounts of data and text, including news articles and e-books, to understand and generate content, including natural language text. Basically, they are trained on so much text that they can predict what word comes next. Take this explanation from Google:

“If you began to type the phrase ‘Mary kicked a…,’ a language model trained on enough data could predict, ‘Mary kicked a ball.’ Without enough training, it might only come up with a ‘round object’ or only its color, ‘yellow.’” (Google’s explainer)
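Google’s “Mary kicked a…” example can be imitated with a toy next-word predictor in Python. This sketch counts which word follows which in a tiny, made-up corpus and picks the most frequent follower. It is a bigram model, a far simpler technique than the neural networks real LLMs use, and it looks only at the single previous word rather than the whole prompt, but the core idea of predicting the next word from training text is the same.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count, for every word, which words follow it in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prompt):
    """Guess the next word from the last word of the prompt, or None if unseen."""
    last = prompt.lower().split()[-1]
    if last not in counts:
        return None  # no training data about this word at all
    return counts[last].most_common(1)[0][0]


# Hypothetical training text; real LLMs see trillions of words.
corpus = [
    "Mary kicked a ball",
    "Tom kicked a ball",
    "Mary threw a stick",
    "Sam kicked a can",
]
model = train_bigrams(corpus)
print(predict_next(model, "Mary kicked a"))  # "ball" follows "a" most often
```

More (and more varied) training text changes the counts and therefore the guesses, which is a crude version of why training data matters so much to LLMs.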

Popular chatbots like OpenAI’s ChatGPT and Google’s Gemini, which have capabilities such as summarizing and translating text, are powered by LLMs.

No, a semiconductor isn’t someone who drives an 18-wheeler semi. Semiconductors, also known as chips (AI chips among them), are used in the electrical circuits of devices such as phones and computers. Electronic devices wouldn’t exist without semiconductors, which are made of pure elements like silicon or compounds like gallium arsenide that conduct electricity. The name “semi” comes from the fact that the material can conduct more electricity than an insulator, but less electricity than a pure conductor like copper.

The world’s largest semiconductor foundry, Taiwan Semiconductor Manufacturing Company (TSMC), makes an estimated 90% of the world’s advanced chips, and counts top chip designers Nvidia and Advanced Micro Devices (AMD) as customers.

Although semiconductors were invented in the U.S., the country now produces about 10% of the world’s chips, not including the advanced ones needed for larger AI models. President Joe Biden signed the CHIPS and Science Act in 2022 to bring chipmaking back to the U.S., and the Biden administration has already invested billions in semiconductor companies, including Intel and TSMC, to build factories throughout the country. Part of that effort also has to do with countering China’s advances in chipmaking and AI development.

A GPU is a graphics processing unit, an advanced chip (or semiconductor) that powers the large language models behind AI chatbots like ChatGPT. It was traditionally used to make video games with higher-quality visuals.

Then a Ukrainian-Canadian computer scientist, Alex Krizhevsky, showed how using a GPU could power deep learning models much faster than a CPU (a central processing unit, the main hardware that powers computers).

CPUs are the “brain” of a computer, carrying out the instructions that make that computer work. A CPU is a processor, which reads and interprets software instructions to control the computer’s functions. A GPU, on the other hand, is an accelerator, a piece of hardware designed to speed up a specific function of a processor.
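Much of the math inside a neural network is matrix multiplication, and each cell of the result can be computed independently of the others. That independence is what lets a GPU spread the work across thousands of cores at once. The Python sketch below only illustrates the idea: it computes the same product one cell at a time, then again by handing cells to a thread pool. Python threads won’t actually make this faster, and this is not how real GPU code is written; the point is that the cells don’t depend on each other, so both approaches give the same answer.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """One output cell: multiply matching entries and add them up."""
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(A, B):
    """CPU-style: compute every cell one after another."""
    cols = list(zip(*B))
    return [[dot(r, c) for c in cols] for r in A]


def matmul_parallel(A, B, workers=4):
    """GPU-style idea: every cell is an independent job that can run at the same time."""
    cols = list(zip(*B))
    cells = [(r, c) for r in A for c in cols]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        flat = list(pool.map(lambda rc: dot(*rc), cells))  # map keeps the original order
    n = len(cols)
    return [flat[i:i + n] for i in range(0, len(flat), n)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul_sequential(A, B))  # [[19, 22], [43, 50]]
print(matmul_parallel(A, B))    # same result
```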

Nvidia is the top GPU designer, with its H100 and H200 chips used in major tech companies’ data centers to power AI software. Other companies are aiming to compete with Nvidia’s accelerators, including Intel with its Gaudi 3 accelerator and Microsoft with its Azure Maia 100 accelerator.

TPU stands for “tensor processing unit.” Google’s chips, unlike those of Microsoft and Nvidia, are TPUs: custom-designed chips made specifically for training large AI models (whereas GPUs were originally made for gaming, not AI).

While CPUs are general-purpose processors and GPUs are additional processors that run high-end tasks, TPUs are custom-built accelerators for running AI services, which can make them more efficient at that work.

As mentioned before, AI chatbots are capable of plenty of tasks, but they also mess up a lot. When LLMs like ChatGPT make up false or nonsensical information, that’s called a hallucination.

Chatbots “hallucinate” when they don’t have the necessary training data to answer a question but still generate a response that looks like a fact. Hallucinations can be caused by various factors, such as inaccurate or biased training data and overfitting, which is when an algorithm fits its training data so closely that it can’t make accurate predictions or draw conclusions about data other than what it was trained on.
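Overfitting is easier to see in a toy example. In this hypothetical Python sketch, the training data follows the rule “double the number.” One “model” memorizes the training examples exactly and, when asked about anything else, still confidently returns an answer it saw before, a crude stand-in for both overfitting and a hallucination. The other learns the underlying pattern (a slope fitted by least squares) and handles new inputs. Real models fail in far subtler ways; this only shows the basic contrast.

```python
# Hypothetical training examples that follow the rule y = 2x.
training_data = {1: 2, 2: 4, 3: 6}


def memorizer(x):
    """Overfit 'model': only knows the exact inputs it was trained on.

    For anything else it still answers, reusing the last answer it saw.
    The reply looks plausible but is wrong, much like a hallucination.
    """
    return training_data.get(x, list(training_data.values())[-1])


def fitted_rule(x):
    """Generalizing 'model': learns a slope from the data (least squares through the origin)."""
    slope = sum(x_i * y_i for x_i, y_i in training_data.items()) / sum(
        x_i * x_i for x_i in training_data
    )
    return slope * x


print(memorizer(2), fitted_rule(2))    # both right on training data
print(memorizer(10), fitted_rule(10))  # memorizer says 6, fitted rule says 20.0
```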

Hallucinations are currently one of the biggest problems with generative AI models, and they’re not exactly easy to fix. Because AI models are trained on massive sets of data, it can be difficult to find specific problems in that data. Sometimes the data used to train AI models is inaccurate to begin with, because it comes from places like Reddit. Although AI models are trained not to answer questions they don’t know the answer to, they don’t always refuse these questions and instead generate answers that may be inaccurate.
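One commonly discussed mitigation is to have a system decline to answer when it isn’t confident enough. The Python sketch below assumes we already have a probability for each candidate answer (the numbers are invented) and refuses when the best one falls below a threshold; the 0.6 cutoff is likewise an arbitrary assumption. It also hints at why this is hard in practice: if a model assigns a high probability to a wrong answer, a threshold like this won’t catch it.

```python
def answer_or_refuse(candidates, threshold=0.6):
    """Return the most likely answer, or refuse if the model isn't confident enough.

    candidates: dict mapping each possible answer to a probability (hypothetical values).
    threshold: minimum probability required to answer (an arbitrary assumption).
    """
    best_answer, best_prob = max(candidates.items(), key=lambda item: item[1])
    if best_prob < threshold:
        return "I don't know."
    return best_answer


# Confident case: one answer clearly dominates.
print(answer_or_refuse({"Paris": 0.92, "Lyon": 0.05, "Marseille": 0.03}))  # Paris
# Uncertain case: probability is spread out, so the system declines.
print(answer_or_refuse({"1952": 0.31, "1954": 0.29, "1949": 0.25}))  # I don't know.
```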