“The king is uninteresting”—Claude 3 surpasses GPT-4 on Chatbot Area for the first time

The battle for AI vibes —

Anthropic’s Claude 3 is first to united states GPT-4 since start of Chatbot Area in Could perhaps ’23.

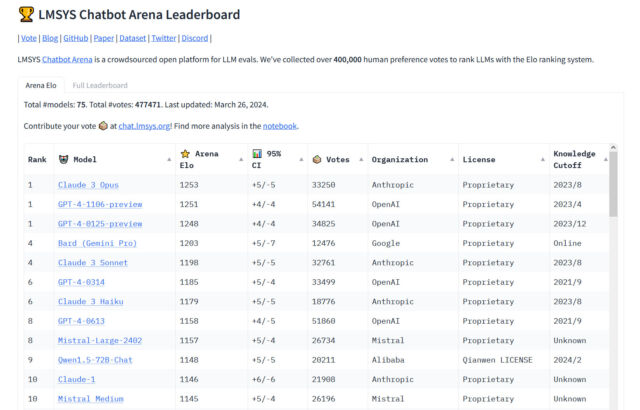

On Tuesday, Anthropic’s Claude 3 Opus big language mannequin (LLM) surpassed OpenAI’s GPT-4 (which powers ChatGPT) for the first time on Chatbot Area, a popular crowdsourced leaderboard susceptible by AI researchers to gauge the relative capabilities of AI language units. “The king is uninteresting,” tweeted draw developer Cut Dobos in a post comparing GPT-4 Turbo and Claude 3 Opus that has been making the rounds on social media. “RIP GPT-4.”

Since GPT-4 used to be incorporated in Chatbot Area around Could perhaps 10, 2023 (the leaderboard launched Could perhaps 3 of that year), diversifications of GPT-4 bear consistently been on the end of the chart till now, so its defeat within the Area is a significant moment within the somewhat quick historic previous of AI language units. One among Anthropic’s smaller units, Haiku, has also been turning heads with its performance on the leaderboard.

“For the first time, the fitting available units—Opus for developed initiatives, Haiku for tag and efficiency—are from a seller that is now no longer OpenAI,” just AI researcher Simon Willison suggested Ars Technica. “That’s reassuring—we all profit from a differ of high vendors in this residence. However GPT-4 is over a year extinct at this point, and it took that year for somebody else to expend up.”

Enlarge / A screenshot of the LMSYS Chatbot Area leaderboard exhibiting Claude 3 Opus within the lead in opposition to GPT-4 Turbo, updated March 26, 2024.

Benj Edwards

Chatbot Area is roam by Trim Model Techniques Organization (LMSYS ORG), a be taught organization dedicated to open units that operates as a collaboration between students and college at University of California, Berkeley, UC San Diego, and Carnegie Mellon University.

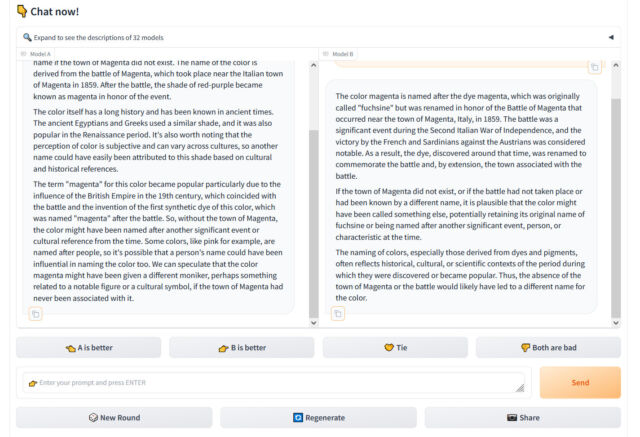

We profiled how the positioning works in December, but briefly, Chatbot Area provides a user visiting the web assign with a chat enter box and two windows exhibiting output from two unlabeled LLMs. The user’s job it to price which output is extra healthy basically basically based on any criteria the user deems most match. By hundreds of those subjective comparisons, Chatbot Area calculates the “ideal” units in aggregate and populates the leaderboard, updating it over time.

Chatbot Area is wanted because researchers and customers alike continuously procure frustration in looking to measure the performance of AI chatbots, whose wildly varying outputs are spirited to quantify. In fact, we wrote about how notoriously spirited it’s to objectively benchmark LLMs in our files share in regards to the start of Claude 3. For that story, Willison emphasized the important position of “vibes,” or subjective emotions, in determining the quality of a LLM. “One more case of ‘vibes’ as a key belief in trendy AI,” he said.

Enlarge / A screenshot of Chatbot Area on March 27, 2024 exhibiting the output of two random LLMs which had been requested, “Would the coloration be known as ‘magenta’ if the city of Magenta did no longer exist?”

Benj Edwards

The “vibes” sentiment is standard within the AI residence, the assign numerical benchmarks that measure files or take a look at-taking potential are recurrently cherry-picked by vendors to construct their results gaze extra favorable. “Proper had a lengthy coding session with Claude 3 opus and man does it absolutely crush gpt-4. I don’t deem standard benchmarks attain this mannequin justice,” tweeted AI draw developer Anton Bacaj on March 19.

Claude’s upward thrust might perhaps perhaps give OpenAI stop, but as Willison talked about, the GPT-4 family itself (even though updated diverse times) is over a year extinct. Currently, the Area lists four diverse versions of GPT-4, which signify incremental updates of the LLM that glean frozen in time because every has a clear output model, and a few developers utilizing them with OpenAI’s API need consistency so their apps constructed on high of GPT-4’s outputs agree with now no longer break.

These consist of GPT-4-0314 (the “fashioned” version of GPT-4 from March 2023), GPT-4-0613 (a snapshot of GPT-4 from June 13, 2023, with “improved feature calling red meat up,” in step with OpenAI), GPT-4-1106-preview (the start version of GPT-4 Turbo from November 2023), and GPT-4-0125-preview (basically the most recent GPT-4 Turbo mannequin, meant to slash cases of “laziness” from January 2024).

Unruffled, even with four GPT-4 units on the leaderboard, Anthropic’s Claude 3 units had been creeping up the charts consistently since their start earlier this month. Claude 3’s success among AI assistant customers already has some LLM customers changing ChatGPT in their each day workflow, doubtlessly eating away at ChatGPT’s market part. On X, draw developer Pietro Schirano wrote, “Truthfully, the wildest part about this complete Claude 3 > GPT-4 is how easy it’s to beautiful… swap??”

Google’s equally capable Gemini Advanced has been gaining traction apart from within the AI assistant residence. That might perhaps perhaps perhaps also build OpenAI on guard for now, but within the lengthy roam, the corporate is prepping fresh units. It is anticipated to begin a critical fresh successor to GPT-4 Turbo (whether named GPT-4.5 or GPT-5) sometime this year, presumably within the summer season. It be obvious that the LLM residence will doubtless be beefy of rivals within the meanwhile, that would also construct for additional attention-grabbing shakeups on the Chatbot Area leaderboard within the months and future years support.