The Download: head transplants, and filtering sounds with AI

This is today’s edition of The Download, our weekday newsletter that provides a daily dose of what’s going on in the world of technology.

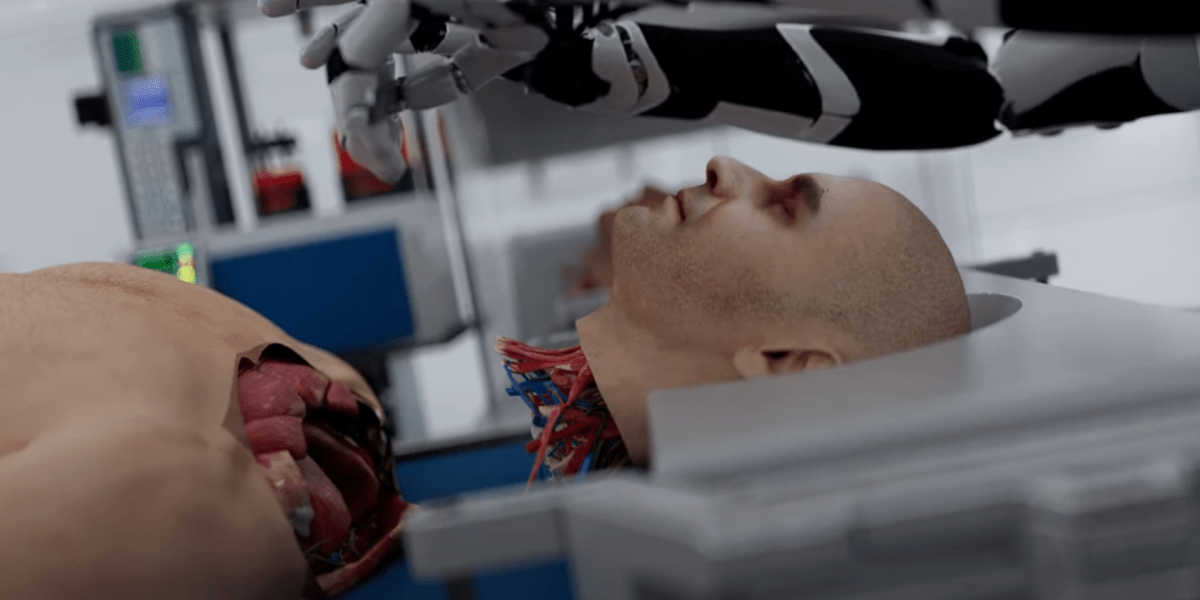

That viral video showing a head transplant is a fake. But it might be real someday.

An animated video posted this week has a voice-over that sounds like a late-night TV ad, but the pitch is straight out of the far future. The arms of an octopus-like robotic surgeon swirl, swiftly removing the head of a dying man and placing it onto a young, healthy body.

This is BrainBridge, the animated video claims: “the world’s first revolutionary concept for a head transplant machine, which uses state-of-the-art robotics and artificial intelligence to conduct complete head and face transplantation.”

BrainBridge is not a real company; it’s not incorporated anywhere. Yet it’s not merely a provocative work of art. This video is better understood as the first public billboard for a hugely controversial scheme to defeat death that’s recently been gaining attention among some life-extension proponents and entrepreneurs. Read the full story.

—Antonio Regalado

Noise-canceling headphones use AI to let a single voice through

Modern life is noisy. If you don’t like it, noise-canceling headphones can reduce the sounds in your environment. But they muffle sounds indiscriminately, so you can easily end up missing something you actually want to hear.

A new prototype AI system for such headphones aims to solve this. Called Target Speech Hearing, the system gives users the ability to select a person whose voice will remain audible even when all other sounds are canceled out.

Although the technology is currently a proof of concept, its creators say they are in talks to embed it in popular brands of noise-canceling earbuds and are also working to make it available for hearing aids. Read the full story.

—Rhiannon Williams

Splashy breakthroughs are exciting, but people with spinal cord injuries need more

—Cassandra Willyard

This week, I wrote about an external stimulator that delivers electrical pulses to the spine to help improve hand and arm function in people who are paralyzed. This isn’t a cure. In many cases the gains were relatively modest.

The study didn’t garner as much media attention as previous, much smaller studies that focused on helping people with paralysis walk. Tech that allows people to type slightly faster or put their hair in a ponytail unaided just doesn’t have the same allure.

For the people who have spinal cord injuries, however, incremental gains can have a huge impact on quality of life. So who does this tech really serve? Read the full story.

This story is from The Checkup, our weekly health and biotech newsletter. Sign up to receive it in your inbox every Thursday.

The must-reads

I’ve combed the internet to find you today’s most fun/important/scary/fascinating stories about technology.

1 Google’s AI search is advising people to put glue on pizza

These tools clearly aren’t ready to provide billions of users with accurate answers. (The Verge)

+ That $60 million Google paid Reddit for its data sure looks questionable. (404 Media)

+ But who’s legally responsible here? (Vox)

+ Why you shouldn’t trust AI search engines. (MIT Technology Review)

2 Russia is increasingly interfering with Ukraine’s Starlink service

It’s disrupting Ukraine’s ability to collect intelligence and conduct drone attacks. (NYT $)

3 Taiwan is ready to shut down its chipmaking machines if China invades

China is currently circling the island on military exercises. (Bloomberg $)

+ Meanwhile, China’s PC makers are on the up. (FT $)

+ What’s next in chips. (MIT Technology Review)

4 X is planning on hiding users’ likes

Elon Musk wants to encourage users to like ‘edgy’ content without fear. (Insider $)

5 The scammer who cloned Joe Biden’s voice could be fined $6 million

Regulators want to make it clear that political AI manipulation will not be tolerated. (TechCrunch)

+ He’s due to appear in court next month. (Reuters)

+ Meta says AI-generated election content is not happening at a “systemic level.” (MIT Technology Review)

6 NSO Group’s former CEO is staging a comeback

Shalev Hulio resigned after the US blacklisted the company. (The Intercept)

7 Rivers in Alaska are running orange

It’s highly likely that climate change is to blame. (WP $)

+ It’s looking unlikely that we’ll limit global warming to 1.5°C. (New Scientist $)

8 We’re learning more about one of the world’s rarest elements

Promethium is incredibly radioactive, and extremely unstable. (New Scientist $)

9 Young people can’t really become music lovers without a phone

Without cassette players or CDs, streaming seems the only option. (The Guardian)

10 AI art will always look cheap 🖼️

It’s no substitute for the real deal. (Vox)

+ This artist is dominating AI-generated art. And he’s not happy about it. (MIT Technology Review)

Quote of the day

“Naming space as a warfighting domain was kind of forbidden, but that’s changed.”

—Air Force General Charles “CQ” Brown explains how the US is preparing to fight adversaries in space, Ars Technica reports.

The big story

How Facebook got hooked on spreading misinformation

March 2021

When the Cambridge Analytica scandal broke in March 2018, it would kick off Facebook’s largest publicity crisis to date. It compounded fears that the algorithms that determine what people see were amplifying fake news and hate speech, and prompted the company to start a team with a directive that was a little vague: to examine the societal impact of the company’s algorithms.

Joaquin Quiñonero Candela was a natural pick to head it up. In his six years at Facebook, he’d created some of the first algorithms for targeting users with content precisely tailored to their interests, and then he’d diffused those algorithms across the company. Now his mandate would be to make them less harmful. However, his hands were tied, and the drive to make money came first. Read the full story.

—Karen Hao