DeepMind and Stanford’s new robot control model follows instructions from sketches

Credit: RT-Sketch

Recent advances in language and vision models have helped make great progress in creating robotic systems that can follow instructions from text descriptions or images. However, there are limits to what language- and image-based instructions can accomplish.

A new study by researchers at Stanford University and Google DeepMind suggests using sketches as instructions for robots. Sketches carry rich spatial information that helps the robot carry out its tasks without getting confused by the clutter of realistic images or the ambiguity of natural language instructions.

The researchers created RT-Sketch, a model that uses sketches to control robots. It performs on par with language- and image-conditioned agents in normal conditions and outperforms them in situations where language and image goals fall short.

Why sketches?

While language is an intuitive way to specify goals, it can become inconvenient when the task requires precise manipulations, such as placing objects in specific arrangements.

On the other hand, images are efficient at depicting the robot's desired goal in full detail. However, access to a goal image is often not possible, and a pre-recorded goal image can contain too many details. Therefore, a model trained on goal images might overfit to its training data and fail to generalize its capabilities to other environments.

“The original idea of conditioning on sketches actually stemmed from early-on brainstorming about how we could enable a robot to interpret assembly manuals, such as IKEA furniture schematics, and perform the necessary manipulation,” Priya Sundaresan, Ph.D. student at Stanford University and lead author of the paper, told VentureBeat. “Language is often extremely ambiguous for these kinds of spatially precise tasks, and an image of the desired scene is not available beforehand.”

The team decided to use sketches because they are minimal, easy to collect, and rich with information. On the one hand, sketches provide spatial information that would be hard to express in natural language instructions. On the other, sketches can convey the specific details of desired spatial arrangements without needing to preserve pixel-level details as an image does. At the same time, they can help models learn to tell which objects are relevant to the task, which results in more generalizable capabilities.

“We see sketches as a stepping stone towards more convenient but expressive ways for humans to specify goals to robots,” Sundaresan said.

RT-Sketch

RT-Sketch is one of many new robotics systems that use transformers, the deep learning architecture used in large language models (LLMs). RT-Sketch is based on Robotics Transformer 1 (RT-1), a model developed by DeepMind that takes language instructions as input and generates commands for robots. RT-Sketch modifies the architecture to replace the natural language input with visual goals, including sketches and images.

To train the model, the researchers used the RT-1 dataset, which includes 80,000 recordings of VR-teleoperated demonstrations of tasks such as moving and manipulating objects, opening and closing cabinets, and more. First, however, they had to create sketches from the demonstrations. For this, they selected 500 training examples and created hand-drawn sketches from the final video frame of each. They then used these sketches and the corresponding video frames, along with other image-to-sketch examples, to train a generative adversarial network (GAN) that can create sketches from images.

A GAN generates sketches from images
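The relabeling step described above, in which a sketch of a demonstration's final frame becomes the goal for that whole episode, can be sketched in Python. This is a minimal illustration under stated assumptions, not the researchers' code: `Example`, `edge_sketch` and `episode_to_examples` are hypothetical names, and `edge_sketch` is a plain gradient-threshold edge detector swapped in for the trained GAN, which is not reproduced here.

```python
import numpy as np
from dataclasses import dataclass


@dataclass
class Example:
    observation: np.ndarray  # H x W x 3 camera frame at step t
    goal_sketch: np.ndarray  # H x W binary sketch of the episode's final frame
    action: np.ndarray       # teleoperated action recorded at step t


def edge_sketch(image: np.ndarray, threshold: float = 0.1) -> np.ndarray:
    """Hypothetical stand-in for the learned image-to-sketch GAN: marks pixels whose
    grayscale gradient magnitude exceeds `threshold` (1 = stroke, 0 = blank)."""
    gray = image.astype(np.float32).mean(axis=-1) / 255.0
    gy, gx = np.gradient(gray)  # derivatives along rows, then along columns
    return (np.hypot(gx, gy) > threshold).astype(np.uint8)


def episode_to_examples(frames, actions, to_sketch=edge_sketch):
    """Use a sketch of the last frame as the goal for every step of one demonstration."""
    if len(frames) != len(actions):
        raise ValueError("expected exactly one action per frame")
    goal = to_sketch(frames[-1])
    return [Example(obs, goal, act) for obs, act in zip(frames, actions)]


# Usage with a synthetic 5-step episode (random pixels, hypothetical 7-D actions).
rng = np.random.default_rng(0)
frames = [rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8) for _ in range(5)]
actions = [rng.normal(size=7) for _ in range(5)]
examples = episode_to_examples(frames, actions)
```

Passing a different `to_sketch` callable, such as a trained image-to-sketch model, leaves the pairing logic unchanged.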

They used the GAN to create goal sketches for training the RT-Sketch model. They also augmented these generated sketches with various colorspace and affine transforms to simulate variations in hand-drawn sketches. The RT-Sketch model was then trained on the original recordings and the sketch of the goal state.
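The augmentation step can be illustrated with NumPy. This sketch assumes sketches are stored as H x W x 3 `uint8` images on a white background; the functions `random_affine`, `color_jitter` and `augment`, and every parameter range in them, are hypothetical choices for illustration rather than values reported by the researchers.

```python
import numpy as np


def random_affine(sketch, rng, max_rot_deg=10.0, max_scale=0.1, max_shift=0.05):
    """Warp an H x W x 3 uint8 sketch by a random rotation, scale and shift.

    Uses nearest-neighbour inverse mapping; output pixels that map from outside
    the source image are filled with white (255), the assumed paper colour.
    """
    h, w = sketch.shape[:2]
    theta = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    scale = 1.0 + rng.uniform(-max_scale, max_scale)
    tx, ty = rng.uniform(-max_shift, max_shift, size=2) * (w, h)
    # Forward map: dst = A @ (src - centre) + centre + t, so invert A to sample.
    a = scale * np.array([[np.cos(theta), -np.sin(theta)],
                          [np.sin(theta), np.cos(theta)]])
    a_inv = np.linalg.inv(a)
    cx, cy = (w - 1) / 2, (h - 1) / 2
    ys, xs = np.mgrid[0:h, 0:w]
    offsets = np.stack([xs - cx - tx, ys - cy - ty])  # shape (2, h, w), x first
    src_x, src_y = np.tensordot(a_inv, offsets, axes=1)
    src_x = np.rint(src_x + cx).astype(int)
    src_y = np.rint(src_y + cy).astype(int)
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.full_like(sketch, 255)
    out[inside] = sketch[src_y[inside], src_x[inside]]
    return out


def color_jitter(sketch, rng, max_gain=0.2, max_offset=20.0):
    """Randomly rescale each RGB channel and shift brightness, clipped to [0, 255]."""
    gains = 1.0 + rng.uniform(-max_gain, max_gain, size=3)
    offset = rng.uniform(-max_offset, max_offset)
    out = sketch.astype(np.float32) * gains + offset
    return np.clip(out, 0, 255).astype(np.uint8)


def augment(sketch, rng):
    """One random geometric warp followed by one random colour change."""
    return color_jitter(random_affine(sketch, rng), rng)


# Usage: a white 64 x 64 canvas with one dark vertical stroke.
rng = np.random.default_rng(0)
sketch = np.full((64, 64, 3), 255, dtype=np.uint8)
sketch[20:44, 30:34] = 0
augmented = augment(sketch, rng)
```

Applying a fresh random transform each time a sketch is sampled means the model rarely sees the exact same goal twice, which is the intent of simulating hand-drawing variation.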

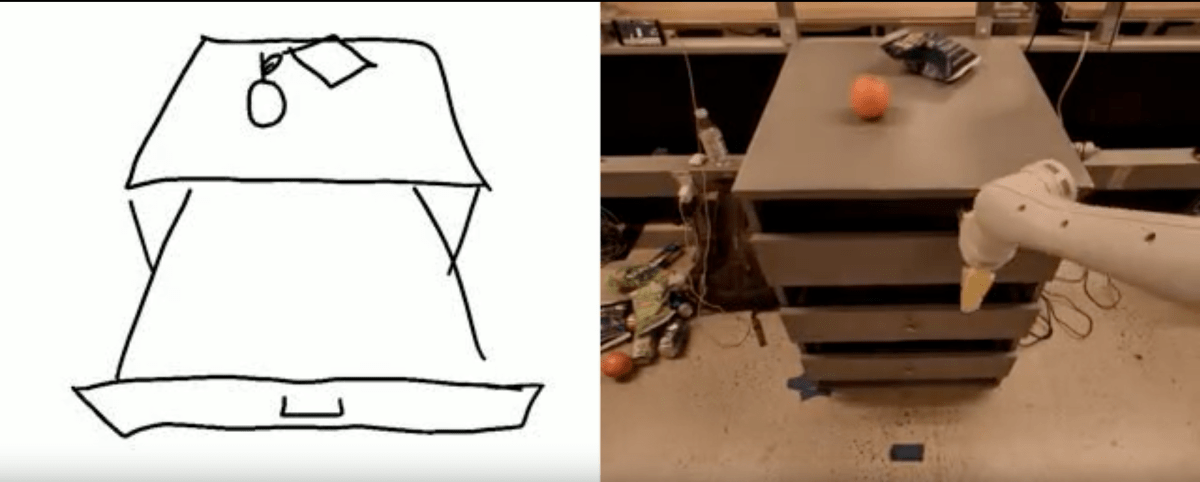

The trained model takes an image of the scene and a rough sketch of the desired arrangement of objects. In response, it generates a sequence of robot commands to reach the desired goal.
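The interface described above, with a scene image and a goal sketch going in and robot commands coming out, can be outlined as a closed control loop. Several parts are assumptions rather than details from the article: stacking the image and sketch channel-wise is just one simple way to condition on both, the discretization into 256 bins over a normalized range is assumed for illustration, and `policy`, `get_observation` and `send_command` are hypothetical callables standing in for the transformer and the robot.

```python
import numpy as np

NUM_BINS = 256                       # assumed number of bins per action dimension
ACTION_LOW, ACTION_HIGH = -1.0, 1.0  # assumed normalised action range


def make_policy_input(observation, goal_sketch):
    """Stack the current camera image and the goal sketch channel-wise (H x W x 6)."""
    if observation.shape != goal_sketch.shape:
        raise ValueError("observation and goal sketch must have the same H x W x 3 shape")
    return np.concatenate([observation, goal_sketch], axis=-1)


def detokenize(tokens):
    """Map integer bin indices in [0, NUM_BINS) to the centres of their bins."""
    width = (ACTION_HIGH - ACTION_LOW) / NUM_BINS
    return ACTION_LOW + (np.asarray(tokens) + 0.5) * width


def rollout(policy, get_observation, send_command, goal_sketch, max_steps=50):
    """Closed loop: re-read the scene every step and ask the policy for the next command."""
    for _ in range(max_steps):
        tokens = policy(make_policy_input(get_observation(), goal_sketch))
        send_command(detokenize(tokens))


# Usage with dummy stand-ins: a constant "policy", a blank camera and a command log.
blank = np.zeros((8, 8, 3), dtype=np.uint8)
sent = []
rollout(lambda x: np.full(7, 128), lambda: blank, sent.append, goal_sketch=blank, max_steps=3)
```

Re-reading the camera at every step, rather than planning the whole sequence up front, lets the policy react to how the scene actually changes while the goal sketch stays fixed.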

“RT-Sketch would be useful in spatial tasks where describing the intended goal would take longer to say in words than to sketch, or in cases where an image may not be available,” Sundaresan said.

RT-Sketch takes in visual instructions and generates action commands for robots

For example, if you want to set a dinner table, language instructions like "put the utensils next to the plate" could be ambiguous when there are multiple sets of forks and knives and many possible placements. A language-conditioned model would require multiple interactions and corrections. At the same time, having an image of the desired scene would require solving the task in advance. With RT-Sketch, you can instead provide a quickly drawn sketch of how you expect the objects to be arranged.

“RT-Sketch could also be applied to scenarios such as arranging or unpacking objects and furniture in a new space with a mobile robot, or to long-horizon tasks such as multi-step folding of laundry, where a sketch can help visually convey step-by-step subgoals,” Sundaresan said.

RT-Sketch in action

The researchers evaluated RT-Sketch in different scenes across six manipulation skills, including moving objects near one another, knocking cans sideways or placing them upright, and closing and opening drawers.

RT-Sketch performs on par with image- and language-conditioned models for tabletop and countertop manipulation. Meanwhile, it outperforms language-conditioned models in scenarios where goals can’t be expressed clearly with language instructions. It is also well suited to scenarios where the environment is cluttered with visual distractors and image-based instructions can confuse image-conditioned models.

“This suggests that sketches are a happy medium; they are minimal enough to avoid being affected by visual distractors, but expressive enough to preserve semantic and spatial awareness,” Sundaresan said.

In the future, the researchers will explore the broader benefits of sketches, such as complementing them with other modalities like language, images, and human gestures. DeepMind already has several other robotics models that use multi-modal models, and it will be interesting to see how they could be improved with the findings of RT-Sketch. The researchers will also explore the versatility of sketches beyond simply capturing visual scenes.

“Sketches can convey motion via drawn arrows, subgoals via partial sketches, constraints via scribbles, or even semantic labels via scribbled text,” Sundaresan said. “All of these can encode useful information for downstream manipulation that we have yet to explore.”
