Meta Partners with Stanford on Forum Around Responsible AI Development

Amid ongoing debate about the parameters that should be set around generative AI, and how it’s used, Meta recently partnered with Stanford’s Deliberative Democracy Lab to conduct a community forum on generative AI, in order to gather feedback from actual users about their expectations and concerns around responsible AI development.

The forum incorporated responses from over 1,500 people in Brazil, Germany, Spain and the US, and focused on the key issues and challenges that people see in AI development.

And there are some interesting notes around the public perception of AI, and its benefits.

The topline results, as highlighted by Meta, show that:

- The majority of participants from each country believe that AI has had a positive impact

- The majority believe that AI chatbots should be able to use past conversations to improve responses, as long as people are informed

- The majority of participants believe that AI chatbots can be human-like, as long as people are informed.

The specific detail, however, is more interesting.

As you can see in this chart, the statements that saw the most positive and negative responses differed by region. Many participants did change their opinions on these elements over the course of the process, but it is interesting to consider where people see the benefits and risks of AI at present.

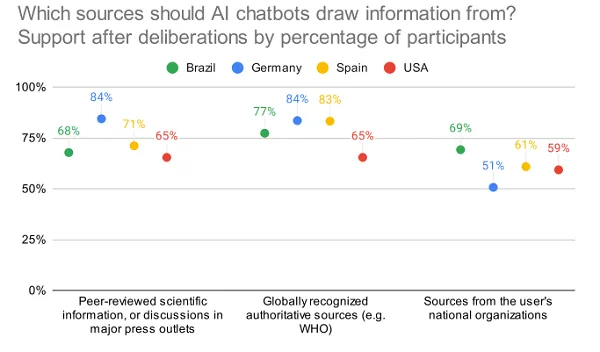

The report also looked at consumer attitudes towards AI disclosure, and where AI tools should source their data:

It’s interesting to note the relatively low approval for these sources in the U.S.

There are also insights on whether people think that users should be able to have romantic relationships with AI chatbots.

It’s a bit unusual, but it is a logical progression, and something that will need to be considered.

Another interesting consideration in AI development, not specifically highlighted in the study, is the controls and weightings that each provider implements within its AI tools.

Google was recently forced to apologize for the misleading and non-representative results produced by its Gemini system, which leaned too heavily towards diverse representation, while Meta’s Llama model has also been criticized for producing more sanitized, politically correct depictions in response to certain prompts.

Examples like these highlight the influence that the models themselves can have on the outputs, which is another key concern in AI development. Should companies have such control over these tools? Does there need to be broader regulation to ensure equal representation and balance in each tool?

Most of these questions are impossible to answer, as we don’t yet fully understand the scope of such tools, or how they might influence broader responses. But it’s becoming clear that we do need some universal guardrails in place to protect users against misinformation and misleading responses.

As such, this is an interesting debate, and it’s worth considering what the results mean for broader AI development.

You can read the full forum report here.